EvSLAM Challenge

The IROS 2025 EvSLAM Challenge focuses on leveraging the high temporal resolution of event cameras to enhance SLAM/VO (VIO) systems. We hope participants will develop innovative solutions that apply the unique capabilities of event cameras to address challenges faced by four common types of platforms in dynamic environments.

Awards

- First Prize: 3000 RMB + Certificate

- Second Prize: 2000 RMB + Certificate

- Third Prize: 1000 RMB + Certificate

Award Winners 🏆

🥇 First Prize

NUDT-VCL, National University of Defense Technology

Members: Jiaxiong Liu, Yang Yi, Zhen Tan, Qiang Gao

Advisors: Prof. Dr. Hui Shen, Prof. Dr. Xieyuanli Chen

🥈 Second Prize

Mobile Robotics Lab, ETH Zürich

Members: Yannick Burkhardt, Sebastián Barbas Laina, Simon Boche

Advisor: Prof. Dr. Stefan Leutenegger

🥉 Third Prize

NAIL, Hunan University

Members: Sheng Zhong, Junkai Niu

Advisor: Prof. Dr. Yi Zhou

The prizes for the EvSLAM Challenge are kindly sponsored by Synsense and iniVation.

Tracks

- Event-Only: SLAM/VO (VIO) systems using event cameras as the visual input.

- Event + Grayscale: SLAM/VO (VIO) systems using event cameras combined with grayscale images as the visual input.

Data

We provide seven sequences (seq001 – seq007) for algorithm evaluation. In addition, a separate test sequence (mecanum-test) with ground truth is included to facilitate algorithm debugging.

You can download the data through this link.

Evaluation

In this challenge, we will adopt two evaluation metrics, i.e., Absolute Trajectory Error (ATE) and Area Under Curve (AUC), for the quantitative assessment of state estimation results.

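- ATE: The ATE quantifies the global consistency of the estimated trajectory by comparing it to the ground truth. It is particularly useful for assessing long-term drift and overall accuracy throughout the trajectory. With $\mathbf{p}_i$ and $\mathbf{p}_{i,\text{gt}}$ denoting the estimated and ground-truth positions at the $i$-th timestamp, and $N$ the number of poses, ATE is defined as: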

$$
\text{ATE} = \frac{1}{N} \sum_{i=1}^{N} \left\| \mathbf{p}_i - \mathbf{p}_{i,\text{gt}} \right\|. \tag{1}
$$
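As a rough illustration of Eq. (1), the following Python sketch averages the per-pose Euclidean position error. It assumes the estimated and ground-truth positions are already time-associated and expressed in the same frame; any alignment step performed by the official evaluation tool is not reproduced here, and the function name `absolute_trajectory_error` is a hypothetical choice for this example.

```python
import math


def absolute_trajectory_error(estimated, ground_truth):
    """Mean Euclidean distance between paired positions, as in Eq. (1).

    Assumption: both lists hold (x, y, z) tuples at identical timestamps
    and in the same coordinate frame (no alignment is done here).
    """
    if not estimated or len(estimated) != len(ground_truth):
        raise ValueError("trajectories must be non-empty and of equal length")
    total = sum(math.dist(p, q) for p, q in zip(estimated, ground_truth))
    return total / len(estimated)


# Toy data: the second pose is off by a 3-4-5 triangle, i.e. 5 m.
estimated = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
ground_truth = [(0.0, 0.0, 0.0), (1.0, 3.0, 4.0)]
ate = absolute_trajectory_error(estimated, ground_truth)
print(ate)  # 2.5
```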

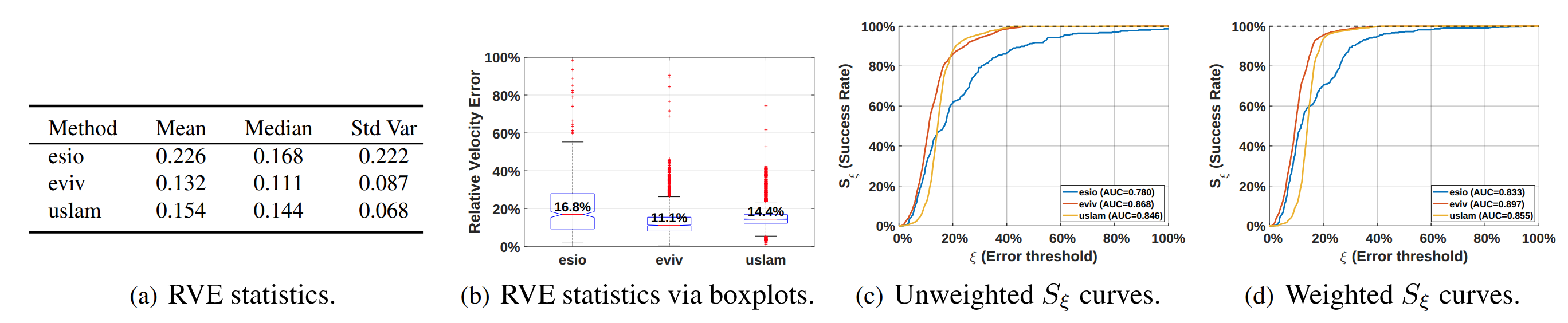

- AUC: Accurate velocity estimation is crucial for closed-loop control, yet no standardized metric assesses linear velocity error. The translation part of the Relative Pose Error (RPE) approximates velocity error, but discretization artifacts and false positives (low RPE despite poor velocity estimates) limit its validity. Common metrics such as Absolute Velocity Error (AVE) and Relative Velocity Error (RVE) report statistical summaries, but they ignore how error severity depends on speed and do not yield a clear ranking. We propose a speed-weighted success metric:

$$
S_{\xi} = \frac{\sum_{i=1}^{N} \left\| \mathbf{v}_{\text{gt},i} \right\| \cdot \delta(e_{\text{RV},i} < \xi)}{\sum_{i=1}^{N} \left\| \mathbf{v}_{\text{gt},i} \right\|}. \tag{2}
$$

where $\delta(\cdot)$ is the indicator function, equal to 1 when its condition holds and 0 otherwise, and $e_{\text{RV},i}$ is the relative velocity error of the $i$-th sample. The RVE threshold $\xi$ defines success, and each sample is weighted by its ground-truth speed to emphasize high-velocity performance. The Area Under Curve (AUC) of $S_{\xi}$ provides a unified ranking (Fig. 1), with weighted curves (Fig. 1(d)) favoring high-speed accuracy over unweighted ones (Fig. 1(c)).
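The speed-weighted success rate and its AUC can be sketched in Python as follows. Several details here are assumptions rather than the official evaluation: the relative velocity error is taken as $\|\mathbf{v}_i - \mathbf{v}_{\text{gt},i}\| / \|\mathbf{v}_{\text{gt},i}\|$, thresholds are swept uniformly over $[0, \xi_{\max}]$ with $\xi_{\max} = 1$, and the area is computed with the trapezoidal rule and normalized by $\xi_{\max}$. The function names are hypothetical.

```python
import math


def speed_weighted_success(v_est, v_gt, xi):
    """S_xi from Eq. (2): speed-weighted fraction of samples with e_RV < xi."""
    weighted_hits = 0.0
    total_speed = 0.0
    for ve, vg in zip(v_est, v_gt):
        speed = math.hypot(*vg)
        if speed == 0.0:
            continue  # a zero-speed sample carries zero weight in Eq. (2)
        e_rv = math.dist(ve, vg) / speed  # ASSUMED definition of relative velocity error
        total_speed += speed
        if e_rv < xi:
            weighted_hits += speed
    return weighted_hits / total_speed if total_speed > 0.0 else 0.0


def success_auc(v_est, v_gt, xi_max=1.0, steps=100):
    """Normalized area under S_xi for xi in [0, xi_max] (trapezoidal rule).

    xi_max and steps are illustrative choices, not values from the challenge.
    """
    xis = [xi_max * k / steps for k in range(steps + 1)]
    s = [speed_weighted_success(v_est, v_gt, xi) for xi in xis]
    area = sum((s[k] + s[k + 1]) / 2.0 * (xis[k + 1] - xis[k]) for k in range(steps))
    return area / xi_max


# Toy data: the slow sample is estimated perfectly, the fast one is missed entirely.
v_gt = [(1.0, 0.0, 0.0), (3.0, 0.0, 0.0)]
v_est = [(1.0, 0.0, 0.0), (0.0, 0.0, 0.0)]
s_half = speed_weighted_success(v_est, v_gt, 0.5)  # 1 / (1 + 3) = 0.25
auc = success_auc(v_est, v_gt)
```

In this toy case the unweighted success rate at $\xi = 0.5$ would be 0.5, whereas the speed-weighted value is 0.25, because the failed sample is the faster one.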

For the ranking, we calculate the total AUC and total ATE across all sequences. The method with the highest AUC score ranks first. In case of a tie in AUC, the method with the smaller ATE wins.
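The ranking rule amounts to a sort with a tie-breaker. The sketch below orders methods by descending total AUC and then ascending total ATE; the method names and scores are made-up placeholders, and `rank_methods` is a hypothetical helper, not part of the evaluation tool.

```python
def rank_methods(scores):
    """Return method names ranked best-first.

    scores maps a method name to (total_auc, total_ate).
    Higher AUC ranks first; on equal AUC, the smaller ATE wins.
    """
    return sorted(scores, key=lambda name: (-scores[name][0], scores[name][1]))


# Placeholder scores, for illustration only.
scores = {
    "method_a": (5.0, 1.2),
    "method_b": (5.0, 0.9),
    "method_c": (6.1, 2.0),
}
ranking = rank_methods(scores)
print(ranking)  # ['method_c', 'method_b', 'method_a']
```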

Submit

Submissions must contain the trajectory of the left event camera. For each of the 7 sequences in the testing data, compute the camera poses and save them in a text file named seq00X.txt. Put all 7 files into a zip file with the following structure:

EvSLAM_Results.zip/

|-- seq001.txt

|-- seq002.txt

|-- ...

|-- seq007.txt

Note that our automatic evaluation tool expects the estimated trajectory to be in this format.

Each line adheres to the format:

timestamp tx ty tz qx qy qz qw vx vy vz

- timestamp: Specifies the pose time, and must match one-to-one with entries in the Reference timestamps file.

- tx ty tz: Represent the 3D position of the event camera's optical center with respect to the world coordinate frame, expressed in meters.

- qx qy qz qw: Represent the orientation of the event camera's optical center relative to the world coordinate frame, expressed as a unit quaternion.

- vx vy vz: Denote the linear velocity of the event camera's optical center, expressed in the camera-centric coordinate frame.
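One possible way to produce the archive is sketched below in Python using only the standard library. It assumes the files sit at the root of the zip, that nine decimal places are acceptable for the numeric fields, and that timestamps are passed as strings copied verbatim from the reference timestamps file so they match exactly. The helper names and the placeholder poses are hypothetical.

```python
import os
import tempfile
import zipfile


def format_pose_line(timestamp, position, quaternion, velocity):
    """Build one 'timestamp tx ty tz qx qy qz qw vx vy vz' line.

    timestamp is a string so it can be copied unchanged from the
    reference timestamps file; the 9-decimal precision is an assumption.
    """
    numbers = [*position, *quaternion, *velocity]
    if len(numbers) != 10:
        raise ValueError("expected 3 position, 4 quaternion and 3 velocity values")
    return " ".join([timestamp] + [f"{x:.9f}" for x in numbers])


def write_submission(trajectories, zip_path):
    """Write one seq00X.txt per sequence into a zip archive."""
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as archive:
        for name in sorted(trajectories):
            lines = [format_pose_line(*pose) for pose in trajectories[name]]
            archive.writestr(f"{name}.txt", "\n".join(lines) + "\n")


# Placeholder data: a single identity pose at rest for each sequence.
placeholder_pose = ("0.000000", (0.0, 0.0, 0.0), (0.0, 0.0, 0.0, 1.0), (0.0, 0.0, 0.0))
trajectories = {f"seq{k:03d}": [placeholder_pose] for k in range(1, 8)}

zip_path = os.path.join(tempfile.mkdtemp(), "EvSLAM_Results.zip")
write_submission(trajectories, zip_path)
with zipfile.ZipFile(zip_path) as archive:
    names = archive.namelist()
```

Before uploading, it may be worth checking that each file has exactly as many lines as the reference timestamps file for that sequence and that every quaternion has unit norm.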

Terms

All participants are required to submit the following materials:

- A video demonstration showcasing the practical performance of the proposed SLAM/VIO system.

- A technical report briefly describing the methodology, system design, and experimental results.

This EvSLAM Benchmark is governed by the NAIL Lab.