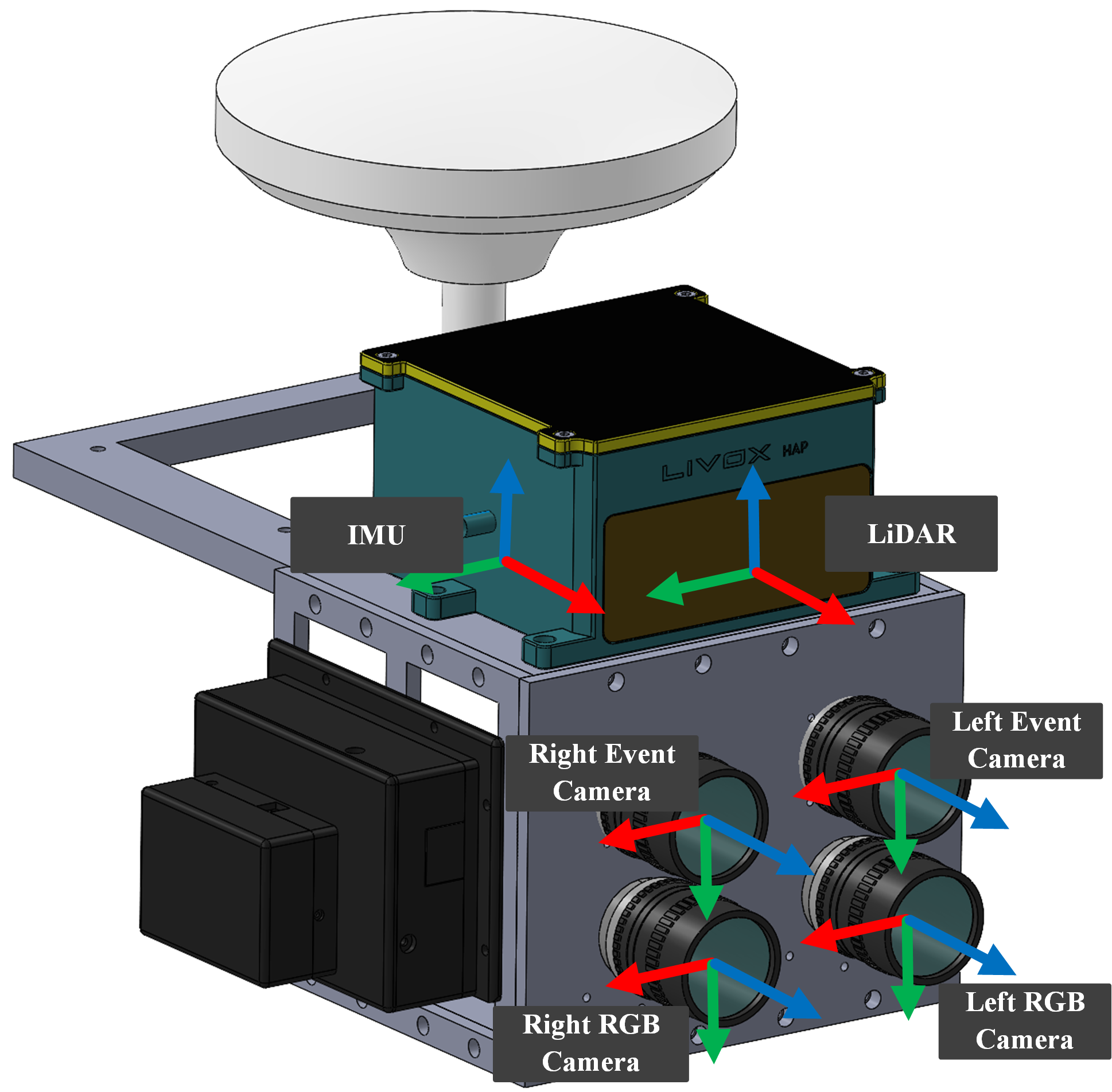

Fig. 1: Illustration of the CAD model of the sensor suite. The axes of all sensors are labeled and color-coded as follows: red for X, green for Y, and blue for Z.

Note: The intrinsic and extrinsic parameters of the sensors are already stored in the HDF5 files. If you only use our dataset, you do not need to download the calibration results below separately.

Intrinsic and Extrinsic Calibration of Cameras

We use the Kalibr toolbox to estimate the intrinsic and extrinsic parameters of each of the two camera pairs separately. Because Kalibr requires image frames for camera calibration, we use the Simple Image Reconstruction library [1] to reconstruct frames from the raw event data. To obtain accurate extrinsic parameters, we first synchronize the event and RGB cameras using the synchronization scheme, and then reconstruct each event frame at the midpoint of the corresponding RGB image's exposure time.
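The midpoint-of-exposure timing can be illustrated with a short Python sketch. It assumes timestamps in microseconds, per-image exposure start times and durations, and a sorted array of event timestamps. The function names and the fixed-length event window ending at the midpoint are hypothetical choices for illustration, not the exact procedure used with the reconstruction library.

```python
from bisect import bisect_left, bisect_right


def mid_exposure_timestamps(exposure_starts_us, exposure_durations_us):
    """Midpoint of each RGB exposure interval: t_mid = t_start + duration / 2."""
    return [start + duration / 2.0
            for start, duration in zip(exposure_starts_us, exposure_durations_us)]


def event_slice_ending_at(event_ts_us, t_end_us, window_us):
    """Index range [lo, hi) of events with t_end_us - window_us <= t <= t_end_us.

    Hypothetical helper; event_ts_us must be sorted in ascending order.
    """
    lo = bisect_left(event_ts_us, t_end_us - window_us)
    hi = bisect_right(event_ts_us, t_end_us)
    return lo, hi


# Hypothetical values: two RGB frames with 10 ms exposures and a few event timestamps.
mids = mid_exposure_timestamps([0, 50_000], [10_000, 10_000])
events = [4_000, 4_900, 5_000, 5_100, 7_000, 55_000]
lo, hi = event_slice_ending_at(events, mids[0], window_us=1_000)
```

Here `mids` is `[5000.0, 55000.0]`, and `events[lo:hi]` holds the events up to the first frame's mid-exposure time that would be fed to the reconstruction in this sketch.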

2024-07-15

- 8-mm Lens Camera Pair: results | raw bag (4.63 GB)

- 16-mm Lens Camera Pair: results | raw bag (5.81 GB)

2024-08-08

- 8-mm Lens Camera Pair: results | raw bag (7.21 GB)

- 16-mm Lens Camera Pair: results | raw bag (6.5 GB)

2024-09-25

- 8-mm Lens Camera Pair: results | raw bag (6.14 GB)

- 16-mm Lens Camera Pair: results | raw bag (7.15 GB)

Extrinsic Calibration of Camera and IMU

For the extrinsic calibration between the cameras and the IMU, we move the sensor suite in front of an AprilTag grid so as to excite all six degrees of freedom of the IMU, and record the corresponding sequences. The Kalibr toolbox is then used to estimate the camera-IMU extrinsic parameters.
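Kalibr reports the camera-IMU extrinsics as a 4x4 homogeneous matrix (`T_cam_imu` in its `camchain-imucam` YAML output) that maps points from the IMU frame to the camera frame. Assuming the released results follow that convention, the minimal plain-Python sketch below shows how such a matrix can be applied and inverted; the helper names and the example matrix are hypothetical.

```python
def invert_rigid(T):
    """Invert a 4x4 rigid transform [R t; 0 1], giving [R^T  -R^T t; 0 1]."""
    R_t = [[T[j][i] for j in range(3)] for i in range(3)]  # R transposed
    t = [T[i][3] for i in range(3)]
    t_inv = [-sum(R_t[i][k] * t[k] for k in range(3)) for i in range(3)]
    return [R_t[i] + [t_inv[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def transform_point(T, p):
    """Apply a 4x4 homogeneous transform T to a 3D point p = [x, y, z]."""
    return [sum(T[i][k] * p[k] for k in range(3)) + T[i][3] for i in range(3)]


# Hypothetical example: camera rotated 90 degrees about the IMU z-axis, offset 0.1 m in x.
T_cam_imu = [
    [0.0, -1.0, 0.0, 0.1],
    [1.0,  0.0, 0.0, 0.0],
    [0.0,  0.0, 1.0, 0.0],
    [0.0,  0.0, 0.0, 1.0],
]
T_imu_cam = invert_rigid(T_cam_imu)

p_imu = [1.0, 0.0, 0.0]
p_cam = transform_point(T_cam_imu, p_imu)   # IMU frame -> camera frame
p_back = transform_point(T_imu_cam, p_cam)  # camera frame -> IMU frame
```

Using the closed-form inverse `[R^T, -R^T t]` instead of a general matrix inverse exploits the fact that the rotation block is orthonormal.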

2024-07-15

- 8-mm Lens RGB Camera with IMU: results | raw bag (2.13 GB)

- 16-mm Lens RGB Camera with IMU: results | raw bag (1.62 GB)

2024-08-08

- 8-mm Lens RGB Camera with IMU: results | raw bag (2.21 GB)

- 16-mm Lens RGB Camera with IMU: results | raw bag (3.1 GB)

2024-09-25

- 8-mm Lens RGB Camera with IMU: results | raw bag (1.64 GB)

- 16-mm Lens RGB Camera with IMU: results | raw bag (1.75 GB)

Extrinsic Calibration of Cameras and LiDAR

The extrinsic calibration between the RGB cameras and the LiDAR is performed as follows. We keep the sensor suite static in a structured outdoor scene and record multiple point cloud frames together with the corresponding RGB images. The initial guess for the calibration is derived from the CAD model of the sensor suite, and the extrinsic parameters are then refined with the Sensors Calibration toolbox.
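Once the LiDAR-camera extrinsics are known, a common sanity check is to project LiDAR points into the RGB image and inspect the overlay. The sketch below assumes a 4x4 matrix `T_cam_lidar` mapping LiDAR coordinates into a camera frame whose z-axis points forward, and an undistorted pinhole model with intrinsics `fx, fy, cx, cy`. These names and values are hypothetical, and lens distortion is ignored for brevity.

```python
def project_lidar_points(points_lidar, T_cam_lidar, fx, fy, cx, cy, width, height):
    """Project 3D LiDAR points into the image with an undistorted pinhole model.

    Returns (u, v, depth) tuples for points in front of the camera that land
    inside a width x height image.
    """
    projected = []
    for p in points_lidar:
        # LiDAR frame -> camera frame: X_cam = R * X_lidar + t
        x, y, z = (sum(T_cam_lidar[i][k] * p[k] for k in range(3)) + T_cam_lidar[i][3]
                   for i in range(3))
        if z <= 0.0:  # point is behind the camera
            continue
        u = fx * x / z + cx
        v = fy * y / z + cy
        if 0.0 <= u < width and 0.0 <= v < height:
            projected.append((u, v, z))
    return projected


# Hypothetical example: identity extrinsics and a 640 x 480 image.
T_identity = [[1.0, 0.0, 0.0, 0.0],
              [0.0, 1.0, 0.0, 0.0],
              [0.0, 0.0, 1.0, 0.0],
              [0.0, 0.0, 0.0, 1.0]]
points = [(0.0, 0.0, 5.0), (1.0, 0.0, 2.0), (0.0, 0.0, -1.0), (100.0, 0.0, 1.0)]
pixels = project_lidar_points(points, T_identity, 500.0, 500.0, 320.0, 240.0, 640, 480)
```

In this example the point behind the camera and the point that falls outside the image are dropped, leaving two projected pixels.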

2024-07-15

- 8-mm Lens RGB Camera with LiDAR: results | raw data (17.4 MB)

- 16-mm Lens RGB Camera with LiDAR: results | raw data (17.9 MB)

2024-08-08

- 8-mm Lens RGB Camera with LiDAR: results | raw data (19.1 MB)

- 16-mm Lens RGB Camera with LiDAR: results | raw data (25.4 MB)

2024-09-25

- 8-mm Lens RGB Camera with LiDAR: results | raw data (22.3 MB)

- 16-mm Lens RGB Camera with LiDAR: results | raw data (22.7 MB)

Extrinsic Parameters Between GNSS/INS and LiDAR Systems

The extrinsic parameters between the GNSS/INS and the LiDAR were derived from the CAD installation dimensions.

- Extrinsic Parameters: results
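For a CAD-derived extrinsic, the transform is typically assembled from a measured translation and a set of mounting angles. The sketch below assumes a Z-Y-X (yaw-pitch-roll) Euler convention, translations in meters, and hypothetical function names and CAD values; the actual frame direction and angle convention should be checked against the released results file.

```python
import math


def rpy_to_matrix(roll, pitch, yaw):
    """Rotation matrix R = Rz(yaw) @ Ry(pitch) @ Rx(roll); angles in radians (assumed convention)."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cyaw, syaw = math.cos(yaw), math.sin(yaw)
    return [
        [cyaw * cp, cyaw * sp * sr - syaw * cr, cyaw * sp * cr + syaw * sr],
        [syaw * cp, syaw * sp * sr + cyaw * cr, syaw * sp * cr - cyaw * sr],
        [-sp, cp * sr, cp * cr],
    ]


def transform_from_cad(translation_m, roll, pitch, yaw):
    """4x4 homogeneous transform from a CAD translation (meters) and mounting angles."""
    R = rpy_to_matrix(roll, pitch, yaw)
    return [R[i] + [translation_m[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def transform_point(T, p):
    """Apply a 4x4 homogeneous transform T to a 3D point p = [x, y, z]."""
    return [sum(T[i][k] * p[k] for k in range(3)) + T[i][3] for i in range(3)]


# Hypothetical CAD values: LiDAR 0.5 m forward, 1.2 m up, yawed 90 degrees.
# T_ins_lidar is assumed to map LiDAR-frame points into the GNSS/INS frame.
T_ins_lidar = transform_from_cad([0.5, 0.0, 1.2], 0.0, 0.0, math.pi / 2)
p_ins = transform_point(T_ins_lidar, [1.0, 0.0, 0.0])
```

With these hypothetical values, a point 1 m along the LiDAR x-axis lands at approximately (0.5, 1.0, 1.2) in the GNSS/INS frame.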