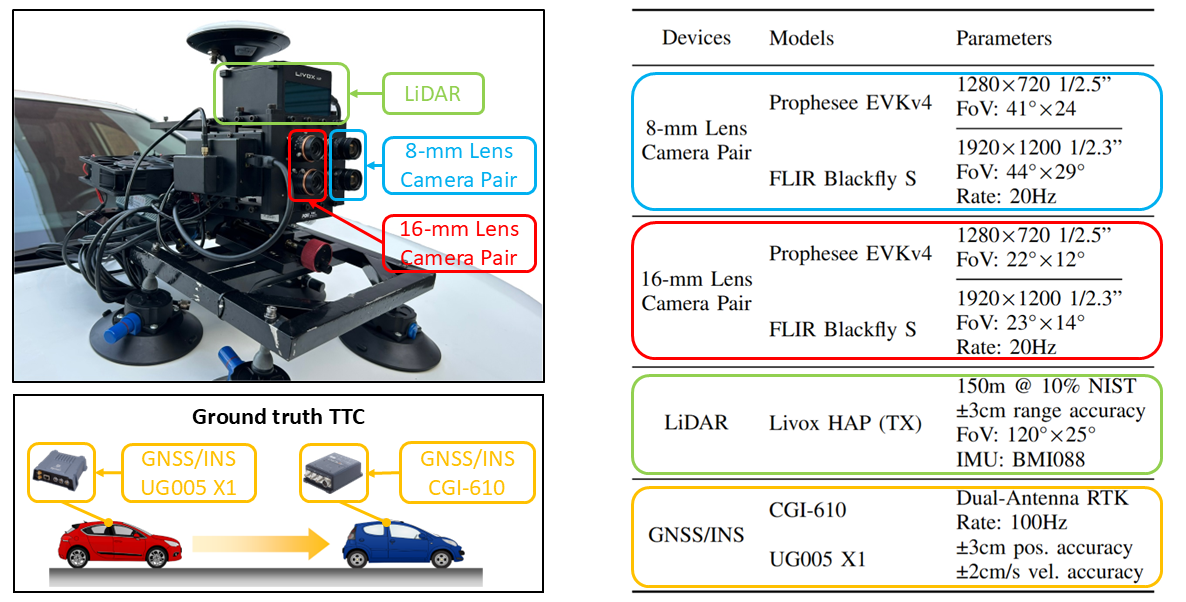

Sensor Suite

The raw data used for estimating TTC comprise RGB images and the corresponding event streams. To cover a relatively complete sensing range, we employ two RGB-event camera pairs equipped with lenses of different focal lengths. Each pair consists of an RGB camera and an event camera: the pair with 8-mm lenses covers the close sensing range and, as a complement, the pair with 16-mm lenses covers the distant sensing range. In each pair, the event camera and the RGB camera are rigidly attached with a narrow baseline of 4 cm; thus, the extrinsic parameters (w.r.t. the coordinate system of the sensor suite) can be approximately shared, which simplifies data fusion across the two sensor modalities. The event camera is a Prophesee EVK-v4 (sensor size 1/2.5”) with a spatial resolution of 1280 × 720 pixels. To ensure a similar field of view (FOV), a FLIR Blackfly-S RGB camera (sensor size 1/2.3”) with a resolution of 1920 × 1200 pixels is used and equipped with an identical lens.
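As a rough check of the FOV matching described above, the horizontal FOV of an ideal pinhole camera can be sketched from the focal length and the active sensor width. The pixel pitches below (4.86 µm for the event sensor, 3.45 µm for the RGB sensor) are assumed values that are not stated in the text, and lens distortion is ignored, so the numbers are indicative only.

```python
import math


def horizontal_fov_deg(focal_length_mm, width_px, pixel_pitch_um):
    """Horizontal FOV (degrees) of an ideal pinhole camera."""
    # Active sensor width in millimetres.
    sensor_width_mm = width_px * pixel_pitch_um / 1000.0
    return math.degrees(2.0 * math.atan(sensor_width_mm / (2.0 * focal_length_mm)))


# ASSUMED pixel pitches (not given in the text); replace with datasheet values.
EVENT_PITCH_UM = 4.86
RGB_PITCH_UM = 3.45

for f_mm in (8.0, 16.0):
    fov_event = horizontal_fov_deg(f_mm, 1280, EVENT_PITCH_UM)
    fov_rgb = horizontal_fov_deg(f_mm, 1920, RGB_PITCH_UM)
    print(f"{f_mm:.0f} mm lens: event {fov_event:.1f} deg, RGB {fov_rgb:.1f} deg")
```

Under these assumptions, the two cameras of a pair differ by only a few degrees in horizontal FOV, and the 16-mm pair sees roughly half the angle of the 8-mm pair.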

The ground-truth data consist of depth information observed from the host vehicle and the motion status of the two vehicles involved (e.g., position, speed, and orientation). To provide this information, we employ a solid-state LiDAR and two high-performance GNSS/INS units. A Livox HAP LiDAR is rigidly attached to the two camera pairs and used for collecting ground-truth depth. As for the GNSS/INS devices, a UG005 X1 device is used on the host vehicle and a CGI-610 device on the lead vehicle, each providing high-frequency motion status of its platform. Note that international vehicle safety assessment organizations, such as the European New Car Assessment Programme (Euro NCAP), require the motion status of the involved vehicles to be collected at a frequency of no lower than 100 Hz. To this end, both GNSS/INS units are configured to provide motion status at 100 Hz.
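To illustrate how a reference TTC could be derived from the motion status of the two vehicles, the following minimal sketch uses the common constant-velocity definition: range divided by closing speed along the line of sight. It is not necessarily the definition adopted for the dataset. It assumes that both GNSS/INS outputs have already been time-aligned and converted to a shared local planar frame in metres, and it ignores the offsets between the antennas and the vehicle bumpers. The function name and argument layout are hypothetical.

```python
import math


def time_to_collision(host_pos, host_vel, lead_pos, lead_vel):
    """Constant-velocity TTC in seconds from planar positions (m) and velocities (m/s).

    Returns math.inf when the vehicles are not closing in on each other.
    """
    rel_pos = (lead_pos[0] - host_pos[0], lead_pos[1] - host_pos[1])
    rel_vel = (lead_vel[0] - host_vel[0], lead_vel[1] - host_vel[1])
    rng = math.hypot(rel_pos[0], rel_pos[1])
    if rng == 0.0:
        return 0.0
    # Closing speed is the negative rate of change of the range.
    closing_speed = -(rel_pos[0] * rel_vel[0] + rel_pos[1] * rel_vel[1]) / rng
    if closing_speed <= 0.0:
        return math.inf
    return rng / closing_speed


# Host 20 m behind the lead vehicle and 5 m/s faster.
ttc = time_to_collision((0.0, 0.0), (10.0, 0.0), (20.0, 0.0), (5.0, 0.0))
print(f"TTC = {ttc:.2f} s")
```

At the 100 Hz rate mentioned above, such a function would be evaluated once per pair of synchronized motion-status samples.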

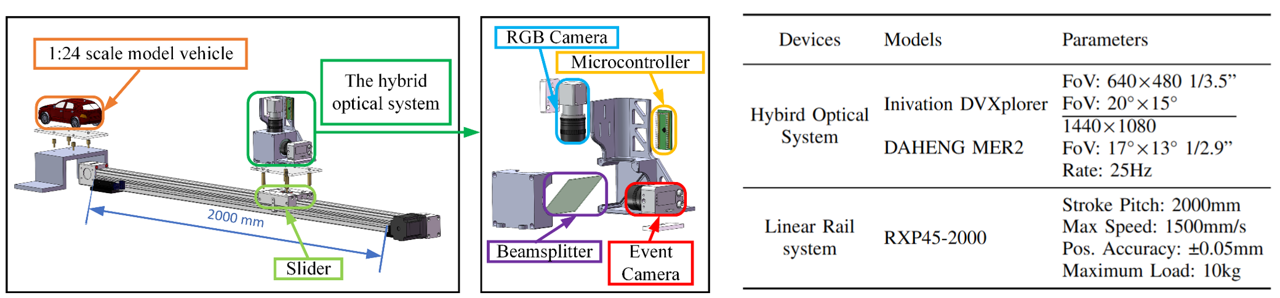

The testbed consists of a hybrid optical system, a linear rail, and a 1:24 scale model vehicle. Specifically, the hybrid optical system comprises a DVXplorer event camera, an RGB camera, and a beam splitter. The beam splitter divides the incoming light into two paths so that both cameras share a unified field of view, which facilitates pixel-level correspondence between the event and RGB cameras. To achieve precise time synchronization, a microcontroller generates two synchronized 25 Hz pulse signals that trigger both cameras simultaneously. The linear rail has an effective travel distance of 2 m and is driven by a control system that includes a servomotor, a driver, and an STM32 development board. The 1:24 scale model vehicle is positioned in front of the cameras and serves as the potential collision target. The hybrid optical system is rigidly mounted on the slider, which can be propelled toward the target at an accurately controlled speed.
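For intuition about the data produced by one run of the testbed, the sketch below lists the trigger instants of a constant-speed run together with the camera-to-target distance and the resulting TTC at each trigger. The 25 Hz trigger rate and the 2 m travel come from the description above; the initial camera-to-target distance, the slider speed, and the function name are hypothetical, and acceleration and deceleration phases of the slider are ignored.

```python
import math


def trigger_samples(start_distance_m, speed_mps, rate_hz=25.0, travel_m=2.0):
    """Return (time_s, distance_m, ttc_s) for each trigger of a constant-speed run."""
    if speed_mps <= 0.0:
        raise ValueError("speed_mps must be positive")
    # Number of trigger intervals that fit into the rail travel.
    n_intervals = math.floor(travel_m / speed_mps * rate_hz + 1e-9)
    samples = []
    for k in range(n_intervals + 1):
        t = k / rate_hz
        distance = start_distance_m - speed_mps * t
        if distance <= 0.0:
            break  # the camera would have reached the target
        samples.append((t, distance, distance / speed_mps))
    return samples


# Hypothetical run: target initially 2.5 m away, slider moving at 0.5 m/s.
run = trigger_samples(start_distance_m=2.5, speed_mps=0.5)
print(len(run), run[0], run[-1])
```

Because the model vehicle is built at 1:24 scale, the same TTC values would correspond, under simple geometric scaling, to a full-scale scene with distances and speeds multiplied by 24.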

Open Source

Design of the CAD model for the small-scale TTC testbed.