EvTTC Benchmark

An Event Camera Dataset for Time-to-Collision Estimation

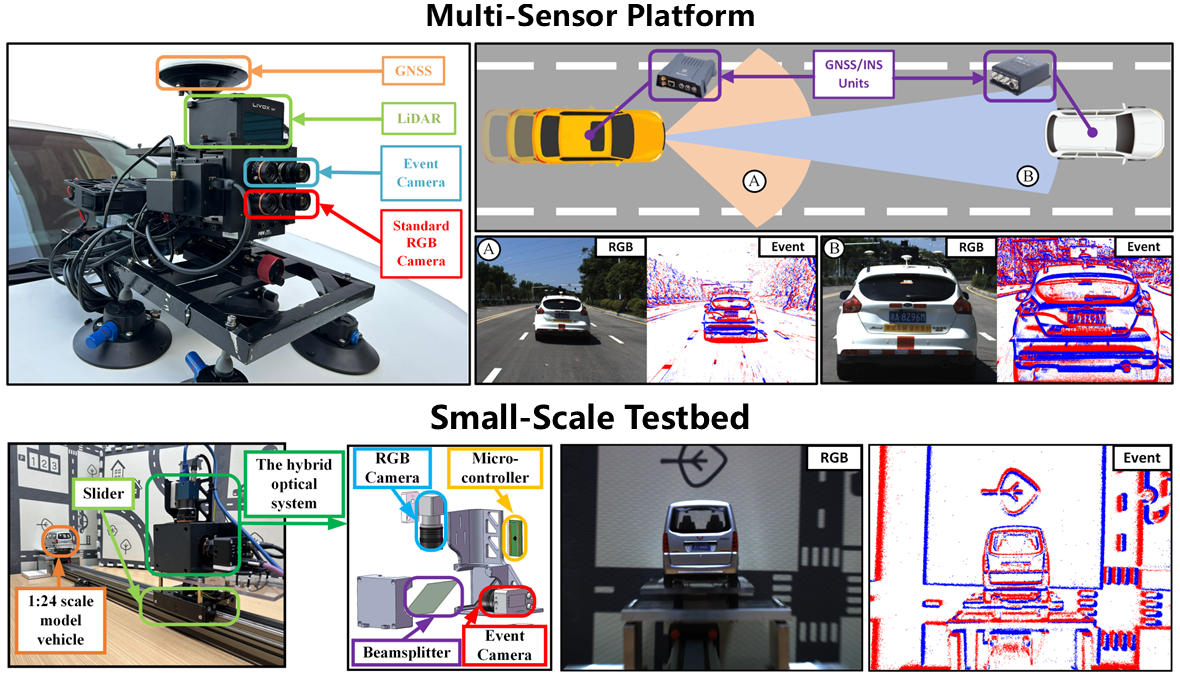

We present EvTTC, an event camera dataset for time-to-collision (TTC) estimation in autonomous driving. Its main contributions are:

- A diverse set of sequences featuring various targets, such as real vehicles, inflatable vehicles, and dummies, across a wide range of relative speeds, including both routine and challenging situations.

- A low-cost and small-scale TTC testbed that facilitates the generation of quasi-real data at different relative speeds. The design of the testbed is open-source.

- A specific benchmark for the TTC task that can serve as an evaluation platform for the community to test and compare different TTC estimation methods.
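For readers new to the task, the quantity being benchmarked can be illustrated with a minimal sketch. Under a constant closing speed, TTC is the remaining distance divided by that speed. The function names and the relative-error score below are hypothetical and chosen for illustration only; they are not taken from the EvTTC toolkit and may differ from the metric the benchmark actually reports.

```python
import math


def time_to_collision(distance_m: float, closing_speed_mps: float) -> float:
    """Constant-velocity TTC in seconds: distance divided by closing speed.

    Returns math.inf when the gap is not shrinking (closing speed <= 0).
    Hypothetical helper, not part of the EvTTC code base.
    """
    if distance_m < 0:
        raise ValueError("distance_m must be non-negative")
    if closing_speed_mps <= 0:
        return math.inf
    return distance_m / closing_speed_mps


def relative_ttc_error(estimated_s: float, ground_truth_s: float) -> float:
    """Absolute TTC error normalised by the ground-truth TTC.

    Assumed metric for illustration; the benchmark's own protocol may differ.
    """
    if ground_truth_s <= 0:
        raise ValueError("ground_truth_s must be positive")
    return abs(estimated_s - ground_truth_s) / ground_truth_s


if __name__ == "__main__":
    # A target 20 m ahead, approached at 10 m/s.
    gt = time_to_collision(20.0, 10.0)
    print(f"ground-truth TTC: {gt:.2f} s")
    print(f"relative error of a 1.8 s estimate: {relative_ttc_error(1.8, gt):.2%}")
```

Normalising by the ground-truth value keeps errors comparable across sequences recorded at different relative speeds, which is one plausible reason to prefer a relative score over an absolute one.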

News

| Date | Update |
|---|---|
| Mar. 2, 2025 | Updated annotations of bounding boxes and segmentation for objects/subjects. |
| Mar. 2, 2025 | Updated ground-truth TTC. |
| Feb. 19, 2025 | Updated detailed results of the TTC Benchmark. |
| Feb. 4, 2025 | All parameters of the Prophesee EVK4 are now available. |
| Feb. 3, 2025 | Depth maps for all sequences are now available. |
| Dec. 5, 2024 | Watch our video on YouTube. |
| Nov. 5, 2024 | EvTTC Benchmark goes live! |

BibTeX

Please cite the following publication when using this benchmark in an academic context:

@misc{sun2024aneventcameradatasetfortime-to-collisionestion,

title = {EvTTC: An Event Camera Dataset for Time-to-Collision Estimation},

author = {Kaizhen Sun and Jinghang Li and Kuan Dai and Bangyan Liao and Wei Xiong and Yi Zhou},

year = 2024,

eprint = {2412.05053},

archiveprefix = {arXiv},

primaryclass = {cs.CV}

}